Hot and cold running content is what draws visitors to your web site.

Too often, getting visitors from search engines is boiled down to a succession of tweaks that may or may not work. But, as I show in this hack, solid content thoughtfully put together can make more of an impact than a decade's worth of fiddling with META tags and building the perfect title page.

Following these 26 steps from A to Z will guarantee a successful site, bringing in plenty of visitors from Google.

A. Prep Work

Start on the content first. Long before you settle on a domain name, begin putting together notes for a site of at least 100 pages. That's 100 pages of "real content," not counting link, resource, about, and copyright pages, which are necessary but not content-rich.

Can't think of 100 pages' worth of content? Consider articles about your business or industry, Q&A pages, or back issues of an online newsletter.

B. Choose a Brandable Domain Name

Choose a domain name that's easily brandable: something like Google.com, not a keyword-stuffed .com.

Keyword domains are out; branding and name recognition are in. Big time in. Keywords in a domain name have never meant less to search engines. Consider Goto.com becoming Overture.com, and understand why the name was changed. It's one of the most powerful gut-check calls I've ever seen on the Internet. It took resolve and nerve to blow away several years of branding. (That's a whole 'nother article, but learn the lesson as it applies to all of us.)

C. Site Design

The simpler your site design, the better. As a rule, text content should outweigh HTML content. The pages should be validated and usable in everything from Lynx to leading browsers. In other words, keep it close to HTML 3.2 if you can. Spiders do not yet like eating HTML 4.0 and the mess that it can bring. Stay away from heavy Flash, Java, or JavaScript.
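One rough way to check whether text outweighs markup is to compare the length of a page's visible text with the length of its raw HTML. The sketch below uses Python's standard `html.parser`; the helper names and the choice to ignore `<script>` and `<style>` contents are assumptions for illustration, not a standard metric or a threshold any search engine is known to use.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Hypothetical helper: collects visible text, skipping <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self.skip_depth = max(0, self.skip_depth - 1)

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def text_ratio(html):
    """Share of the page's characters that are visible text (whitespace collapsed)."""
    if not html:
        return 0.0
    parser = TextExtractor()
    parser.feed(html)
    parser.close()  # flush any buffered trailing text
    text = " ".join(" ".join(parser.chunks).split())
    return len(text) / len(html)


if __name__ == "__main__":
    page = "<html><body><h1>Oranges</h1><p>Fresh citrus, picked daily.</p></body></html>"
    print(f"text/HTML ratio: {text_ratio(page):.2f}")
```

By this measure, a ratio above 0.5 means the page carries more text than markup.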

If you must use scripting languages, keep the scripts in external files, though I see little reason to use them at all. They rarely help a site and can actually hurt it greatly, for many reasons that most people don't appreciate (the search engines' distaste for JavaScript is just one of them).

Arrange the site logically, with directory names that hit the top keywords you want to emphasize. You can also go the other route and put everything in the top-level directory (this is rather controversial, but it has produced good long-term results across many engines). Don't clutter or spam your site with frivolous links such as "best viewed in..." or with extras such as counters. Keep it clean and professional to the best of your ability.

Learn the lesson of Google itself: simple is retro cool. Simple is what surfers want.

Speed isn't everything; it's the only thing. Your site should respond almost instantly to a request. If there's a three- to four-second delay before "something happens" in the browser, you're in trouble. Response times will be longer for visitors in other countries, but locally the site should respond to any request within three to four seconds at most. Beyond that, you'll lose 10 percent of your audience for each additional second, and that 10 percent could be the difference between success and failure.
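A minimal sketch for putting numbers on this, assuming you run it from a machine near your server (so it approximates the "local" response time) and assuming the 10 percent loss compounds, meaning each extra second loses 10 percent of the visitors still waiting. The four-second limit is the upper end of the range above; both helper names are hypothetical.

```python
import time
import urllib.request


def time_to_first_byte(url, timeout=10.0):
    """Hypothetical helper: seconds from request until the first body byte arrives."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read(1)
    return time.perf_counter() - start


def estimated_audience_kept(delay_s, limit_s=4.0, loss_per_second=0.10):
    """Fraction of visitors kept, assuming each second past limit_s loses 10% of those remaining."""
    extra = max(0.0, delay_s - limit_s)
    return (1.0 - loss_per_second) ** extra


if __name__ == "__main__":
    for delay in (2.0, 4.0, 5.0, 7.0):
        print(f"{delay:.0f} s -> {estimated_audience_kept(delay):.0%} of visitors kept")
```

Pass your own page URL to `time_to_first_byte` and feed the result into `estimated_audience_kept` to see where you stand.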

D. Page Size

The smaller the page size, the better. Keep it under 15 KB, including images, if you can. The smaller the better. Keep it under 12 KB if you can. The smaller the better. Keep it under 10 KB if you can. I trust you are getting the idea here. Aim for over 5 KB and under 10 KB. It's tough to do, but it's worth the effort. Remember, 80 percent of your surfers will be connecting at 56 Kbps or less.
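The arithmetic behind these limits is simple: a page's size in bits divided by the line speed gives a best-case transfer time. This sketch assumes 1 KB = 1,024 bytes and 1 Kbps = 1,000 bits per second, and ignores latency, protocol overhead, and the fact that real 56 Kbps modems rarely reach full speed. Under those assumptions, a 10 KB page needs roughly 1.5 seconds and a 15 KB page about 2.2 seconds, which eats most of a three- to four-second budget.

```python
def download_seconds(page_kb, line_kbps=56.0):
    """Best-case transfer time for page_kb kilobytes over a line_kbps connection.

    Assumes 1 KB = 1,024 bytes and 1 Kbps = 1,000 bits/s; real pages load slower.
    """
    bits = page_kb * 1024 * 8
    return bits / (line_kbps * 1000)


if __name__ == "__main__":
    for size in (5, 10, 12, 15):
        print(f"{size:>2} KB at 56 Kbps: {download_seconds(size):.1f} s")
```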

E. Content

Build one page of content (between 200 and 500 words) per day and put it online.
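To keep each day's page inside the 200 to 500 word target, a quick word count on the draft is enough. This sketch assumes plain text (strip the HTML first) and treats a "word" as a run of letters or digits with an optional apostrophe suffix; other tools may count slightly differently. The function names are hypothetical.

```python
import re

# Assumption: a word is letters/digits, optionally followed by one apostrophe part (don't, it's).
WORD = re.compile(r"[A-Za-z0-9]+(?:'[A-Za-z]+)?")


def word_count(text):
    """Count words in a plain-text draft."""
    return len(WORD.findall(text))


def in_daily_range(text, low=200, high=500):
    """True if the draft falls inside the 200 to 500 word target for a daily page."""
    return low <= word_count(text) <= high
```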

If you aren't sure what you need for content, start with the Overture keyword suggestion tool (http://inventory.overture.com/d/searchinventory/suggestion/) and find the core set of keywords for your topic area. Those are your subject starters.

F. Keyword Density and Keyword Positioning

This is simple, old-fashioned Search Engine Optimization (SEO) from the ground up.

Use the keyword once in the title, once in the description tag, once in a heading, once in the URL, once in bold, once in italic, and once high on the page, and make sure the density is between 5 and 20 percent (don't fret about it). Use well-written sentences and spellcheck them! Spellchecking is becoming more important as search engines are moving toward autocorrection during searches. There is no longer a reason to look like you can't spell.
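The placement checklist and the density range above can be checked mechanically. The sketch below is one way to do it with Python's standard `html.parser`, under some assumptions of its own: the keyword is a single word, density is keyword occurrences as a percentage of visible body words (title excluded), and "high on the page" means within the first 100 body words. The class and function names are hypothetical.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urlparse

# Placement slots from the checklist, mapped to the tags that fill them.
SLOTS = {
    "title": {"title"},
    "heading": {"h1", "h2", "h3", "h4", "h5", "h6"},
    "bold": {"b", "strong"},
    "italic": {"i", "em"},
}
WORD_RE = re.compile(r"[a-z0-9']+")


class PageScanner(HTMLParser):
    """Hypothetical helper: collects body words and text inside each placement slot."""

    def __init__(self):
        super().__init__()
        self.depth = {slot: 0 for slot in SLOTS}
        self.slot_text = {slot: [] for slot in SLOTS}
        self.skip = 0  # > 0 while inside <script> or <style>
        self.description = ""
        self.body_words = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""
        if tag in ("script", "style"):
            self.skip += 1
        for slot, tags in SLOTS.items():
            if tag in tags:
                self.depth[slot] += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self.skip = max(0, self.skip - 1)
        for slot, tags in SLOTS.items():
            if tag in tags:
                self.depth[slot] = max(0, self.depth[slot] - 1)

    def handle_data(self, data):
        if self.skip:
            return
        for slot, depth in self.depth.items():
            if depth:
                self.slot_text[slot].append(data)
        if not self.depth["title"]:
            self.body_words.extend(WORD_RE.findall(data.lower()))


def keyword_report(html, url, keyword, top_words=100):
    """Return (density in percent, {placement: keyword present?}) for a one-word keyword."""
    kw = keyword.lower()
    scanner = PageScanner()
    scanner.feed(html)
    scanner.close()
    words = scanner.body_words
    density = 100.0 * words.count(kw) / len(words) if words else 0.0
    placements = {
        slot: kw in WORD_RE.findall(" ".join(parts).lower())
        for slot, parts in scanner.slot_text.items()
    }
    placements["description"] = kw in WORD_RE.findall(scanner.description.lower())
    placements["url"] = kw in urlparse(url).path.lower()
    placements["high_on_page"] = kw in words[:top_words]
    return density, placements
```

Any placement that comes back `False`, or a density outside 5 to 20 percent, points to the spot on the page to revisit.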

G. Outbound Links

From every page, link to one or two high-ranking sites under the keyword you're trying to emphasize. Use your keyword in the link text (this is ultra-important for the future).
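A small sketch for auditing this rule on a page: it lists links that point to a different host than the page itself and flags whether the keyword appears in each link's text. It assumes "outbound" means a different host name (so `www.example.com` and `example.com` count as different), and the names are hypothetical. A page following this step would return one or two entries, ideally with `True` for the keyword.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Hypothetical helper: collects (href, anchor text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def outbound_links(html, page_url, keyword):
    """Return [(absolute_url, keyword_in_link_text)] for links leaving page_url's host."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    own_host = urlparse(page_url).netloc.lower()
    result = []
    for href, text in collector.links:
        absolute = urljoin(page_url, href)
        host = urlparse(absolute).netloc.lower()
        if host and host != own_host:  # skips relative links and mailto: links
            result.append((absolute, keyword.lower() in text.lower()))
    return result
```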

H. Cross-Links

Cross-links are links within the same site.

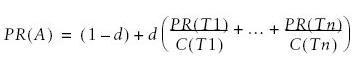

Link to on-topic quality content across your site. If a page is about food, make sure it links to your apples page and your veggies page. With Google, on-topic cross-linking is important for sharing your PageRank value across your site. You do not want an all-star page that outperforms the rest of your site. You want 50 pages that produce 1 referral each a day, not 1 page that produces 50 referrals each day. If you find a page that drastically outproduces the rest of the site with Google, you need to offload some of that PageRank value to other pages by cross-linking heavily. It's that old share-the-wealth thing.
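To see how cross-links move value around, you can run the simplified PageRank formula from Brin and Page's original description on a toy site: each page's score is (1 - d)/N plus d times the sum of each linking page's score divided by its number of outgoing links. This is a sketch only. Google's actual ranking uses far more than this, the damping factor d = 0.85 is the commonly cited value rather than anything confirmed here, only links within the site are modeled, and the page names are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Simplified PageRank by power iteration.

    links maps each page to the list of pages it links to within the site.
    Pages with no outgoing links spread their score evenly over all pages.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                for p in pages:
                    new[p] += damping * rank[page] / n
        rank = new
    return rank


if __name__ == "__main__":
    before = {"home": ["star", "apples", "veggies"], "star": ["home"],
              "apples": ["star"], "veggies": ["star"]}
    after = dict(before, star=["home", "apples", "veggies"])  # star now cross-links
    for label, graph in (("before", before), ("after", after)):
        scores = pagerank(graph)
        print(label, {p: round(s, 3) for p, s in sorted(scores.items())})
```

In this toy graph, giving the star page links to the apples and veggies pages raises each of them from about 0.14 to about 0.20, mostly at the expense of the home page.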

I. Put It Online

Don't go with virtual hosting; go with a standalone IP address.

Make sure the site is crawlable by a spider. All pages should be linked to more than one other page on your site, and not more than two levels deep from the top directory. Link the topic vertically as much as possible back to the top directory. A menu that is present on every page should link to your site's main topic index pages (the doorways and logical navigation system that lead to real content). Don't put your site online before it is ready. It's worse to put a nothing site online than no site at all. You want it to be fleshed out from the start.
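If you keep a map of which page links to which, a breadth-first walk from the top page checks these crawlability rules. This sketch reads "linked to more than one other page" as "has links from at least two other pages", and "two levels deep" as at most two clicks from the top page; both readings, and the function name, are assumptions for illustration.

```python
from collections import deque


def crawl_audit(links, root, max_depth=2, min_inbound=2):
    """Report pages a spider starting at root cannot reach, reaches too deep, or
    finds linked from fewer than min_inbound other pages.

    links maps each page to the list of pages it links to.
    """
    depth = {root: 0}
    queue = deque([root])
    while queue:  # breadth-first search gives the fewest clicks to each page
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    inbound = {p: set() for p in all_pages}
    for source, targets in links.items():
        for t in targets:
            if t != source:
                inbound[t].add(source)
    return {
        "unreachable": sorted(all_pages - set(depth)),
        "too_deep": sorted(p for p, d in depth.items() if d > max_depth),
        "few_inbound": sorted(p for p in all_pages
                              if p != root and len(inbound[p]) < min_inbound),
    }
```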

Go for a listing in the Open Directory Project (ODP) (http://dmoz.org/add.html). Getting accepted to the ODP will probably get your pages listed in the Google Directory.

J. Submit

Submit your main URL to Google, F*, AltaVista, WiseNut, Teoma, DirectHit, and Hotbot. Now comes the hard part: forget about submissions for the next six months. That's right, submit and forget.

K. Logging and Tracking

Get a quality logger/tracker that can do justice to inbound referrals based on logfiles. Don't use a graphic counter; you need a program that can provide much more information than that. If your host doesn't support referrers, back up and get a new host. You can't run a modern site without full referrals available 24/7/365 in real time.
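If your host writes logs in the Apache/NCSA "combined" format (which includes the referrer and user-agent fields), a short script can tally which sites send you visitors. This is a minimal sketch assuming that format; it skips empty (`-`) referrers and clicks from your own pages, and the function name is hypothetical.

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Combined log format: host ident user [time] "request" status bytes "referrer" "user-agent"
COMBINED = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def referring_hosts(lines, own_host):
    """Count requests per referring host, ignoring blank referrers and internal clicks."""
    counts = Counter()
    for line in lines:
        m = COMBINED.match(line)
        if not m:
            continue  # not a combined-format line
        ref = m.group("referrer")
        if ref in ("", "-"):
            continue
        host = urlparse(ref).netloc.lower()
        if host and host != own_host.lower():
            counts[host] += 1
    return counts
```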

L. Spiderings

Watch for spiders from search engines (one reason you need a good logger and tracker!). Make sure that spiders crawling the full site can do so easily. If not, double-check your linking system to make sure the spider can find its way throughout the site. Don't fret if it takes two spiderings to complete your whole site for Google or F*. Other search engines are potluck; if you haven't been added within six months, it's doubtful you'll be added at all.
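With a combined-format log, you can list which pages each spider actually fetched and compare that against your full page list to spot pages it never reached. The user-agent tokens below are an illustrative, incomplete assumption; check the user-agent strings that actually appear in your own logs and adjust the list. The function name is hypothetical.

```python
import re
from collections import defaultdict

COMBINED = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)
# Assumption: substrings that identify some spiders' user agents; extend from your logs.
SPIDER_TOKENS = ("Googlebot", "Slurp", "Scooter", "ia_archiver")


def pages_by_spider(lines):
    """Map each spider token to the set of paths requested by matching user agents."""
    crawled = defaultdict(set)
    for line in lines:
        m = COMBINED.match(line)
        if not m:
            continue
        parts = m.group("request").split()  # e.g. ["GET", "/oranges.html", "HTTP/1.0"]
        if len(parts) < 2:
            continue
        agent = m.group("agent").lower()
        for token in SPIDER_TOKENS:
            if token.lower() in agent:
                crawled[token].add(parts[1])
    return crawled
```

Subtracting `pages_by_spider(lines)["Googlebot"]` from the set of all your page paths shows what the spider has not fetched yet.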

M. Topic Directories

Almost every keyword sector has an authority hub on its topic. Find it (Google Directory can be very helpful here because you can view sites based on how popular they are) and submit within the guidelines.

N. Links

Look around your keyword section in the Google Directory; this is best done after getting an Open Directory Project listing or two. Find sites that have link pages or freely exchange links. Simply request a swap. Put a page of on-topic, in-context links on your site as a collection spot. Don't worry if you can't get people to swap links; move on. Try to swap links with one fresh site a day. A simple personal email is enough. Stay low-key about it and don't worry if site Z doesn't link to you. Eventually it will.

O. Content

Add one page of quality content per day. Timely, topical articles are always best. Try to stay away from too much blogging of personal material and look more for article topics that a general audience will like. Hone your writing skills and read up on the right style of web speak that tends to work with the fast-and-furious web crowd: lots of text breaks, short sentences, lots of dashes, something that reads quickly.

Most web users don't actually read; they scan. This is why it is so important to keep key pages to a minimum. If people see a huge, overblown page, a portion of them will hit the Back button before trying to decipher it. They have better things to do than spend 15 seconds (a stretch) figuring out your whizbang menu system. Just because some big support site can run Flash-heavy pages doesn't mean that you can. You don't have the pull factor that they do.

Use headers and bold standout text liberally on your pages as logical separators. I call them scanner stoppers because the scanning eye naturally comes to rest on them.

P. Gimmicks

Stay far away from fads of the day or anything that appears spammy, unethical, or tricky. Plant yourself firmly on the high ground in the middle of the road.

Q. Linkbacks

When you receive requests for links, check out the sites before linking back to them. Check them through Google for their PageRank value. Look for directory listings. Don't link back to junk just because you were asked. Make sure they're sites similar to yours and on-topic. Linking to bad neighborhoods, as Google calls them, can actually cost you PageRank points.

R. Rounding Out Your Offerings

Use options such as "email a friend," forums, and mailing lists to round out your site's offerings. Hit the top forums in your market and read, read, read until your eyes hurt. Stay away from affiliate fads that insert content onto your site, such as banners and pop-up windows.

S. Beware of Flyer and Brochure Syndrome

If you have an e-commerce site or an online version of a bricks-and-mortar business, be careful not to turn your site into a brochure. These don't work at all. Think about what people want. They don't come to your site to view your content; they come looking for their content. Talk as little about your products and yourself as possible in articles (sounds counterintuitive, doesn't it?).

T. Keep Building One Page of Content Per Day

Head back to the Overture suggestion tool (http://inventory.overture.com/d/searchinventory/suggestion/) to get ideas for fresh pages.

U. Study Those Logs

After a month or two, you will start to see a few referrals from places you were able to get listed. Look for the keywords people are using. See any bizarre combinations? Why are people using them to find your site? If there is something you have overlooked, then build a page around that topic. Engineer your site to feed the search engine what it wants. If your site is about oranges, but your referrals are about orange citrus fruit, then get busy building articles around citrus and fruit instead of the generic oranges. The search engines tell you exactly what they want to be fed. Listen closely! There is gold in referral logs; it's just a matter of panning for it.
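Panning that gold can start with pulling the search phrases out of referrer URLs. This sketch assumes the engine puts the query in a parameter named `q`, `p`, or `query` (Google and AltaVista used `q`, Yahoo used `p`); other engines may differ, and a non-search site that happens to use one of those names can produce false matches. The function names are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse, parse_qs

# Assumption: query-string parameters that carry the search phrase.
QUERY_PARAMS = ("q", "p", "query")


def search_phrases(referrers):
    """Count normalized search phrases found in referrer URLs."""
    counts = Counter()
    for ref in referrers:
        params = parse_qs(urlparse(ref).query)  # also decodes '+' to a space
        for name in QUERY_PARAMS:
            if name in params:
                phrase = " ".join(params[name][0].lower().split())
                if phrase:
                    counts[phrase] += 1
                break
    return counts


def search_terms(phrases):
    """Break phrase counts into single-word counts (orange, citrus, fruit...)."""
    terms = Counter()
    for phrase, n in phrases.items():
        for word in phrase.split():
            terms[word] += n
    return terms
```

Terms that rank high in `search_terms` but have no page of their own are candidates for new articles.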

V. Timely Topics

Nothing breeds success like success. Stay abreast of developments in your topic of interest. If big site Z is coming out with product A at the end of the year, build a page and have it ready in October so that search engines get it by December.

W. Friends and Family

Networking is critical to the success of a site. This is where all that time you spend in forums pays off. Here's the catch-22 about forums: lurking is almost useless. The value of a forum is in the interaction with your colleagues and cohorts. You learn from the interaction, not just by reading. Networking pays off in linkbacks, tips, and email exchanges, and generally puts you in the loop of your keyword sector.

X. Notes, Notes, Notes

If you build one page per day, you will find that brainstorm-like inspiration will hit you in the head at some magic point. Whether you are in the shower (dry off first), driving (please pull over), or just parked at your desk, write it down! If you don't, then 10 minutes later, you will have forgotten all about that great idea. Write it down and get specific about what you are thinking. When the inspirational juices are no longer flowing, come back to those content ideas. It sounds simple, but it's a lifesaver when the ideas stop coming.

Y. Submission Check at Six Months

After six months, walk back through your submissions and see if you have been listed in all the search engines you submitted to. If not, resubmit and forget again. Try those freebie directories again, too.

Z. Keep Building Those Pages of Quality Content!

Starting to see a theme here? Google loves content, lots of quality content. The content you generate should be based on a variety of keywords. After a year, you should have around 400 pages of content. This will get you good placement under a wide range of keywords, generate reciprocal links, and position your site to stand on its own two feet.

Do these 26 things, and I guarantee you that in one year's time, you will call your site a success. It will draw between 500 and 2,000 referrals a day from search engines. If you build a good site and achieve an average of 4 to 5 page views per visitor, you should be in the range of 10,000 to 15,000 page views per day in one year's time. What you do with that traffic is up to you!